Why Links Matter

- Core Principle:Links are not just navigation paths; they are implicit endorsements and votes of confidence.

- Structural Signals:They provide a rich structural layer that complements content (keyword) analysis.

- Authority & Quality:Link analysis allows search engines to quantify the importance and qualityof a page.

- A link from an authoritative source transfers trust and relevance.

- Crucial for solving the Quality/Authorityproblem in search ranking.

Historical Context: A Breakthrough

- Pre-Link Analysis (The Past):Ranking was based almost entirely on term frequency, density, and content matching. This was highly vulnerable to keyword stuffing and manipulation.

- The Breakthrough (Late 1990s):The introduction of link analysis (specifically PageRank and HITS) revolutionized ranking.

- The Change:It introduced an intrinsic, query-independent measure of importance that was difficult to artificially inflate, leading to significantly better search quality.

The Web as a Graph

- Definition:The entire World Wide Web can be modeled as a massive, directed graph.

- Nodes (Vertices):Represent individual web pages, documents, or resources (e.g., a specific URL).

- Edges (Directed Links):Represent the hyperlinks connecting the pages.

- The connection is directed: A link from A to B is not the same as a link from B to A.

- Structure:The web graph is characterized by its enormous scale, high sparsity(few links per page), and complex connectivity.

Introduction to Link Analysis

The Web as a Graph

7

PageRank

18

Hubs and Authorities (HITS Algorithm)

6

Hubs and Authorities (HITS Algorithm)—Choosing the Subset of the Web

3

Advanced Link-Based Models

6

Link Analysis Beyond Web Search

3

Modern Trends in Link Analysis

3

Evaluation and Critiques

3

The Web as a Graph

Structural Properties: Scale and Sparsity

- Scale (Massive Size):The graph contains billions of nodes (pages) and trillions of edges (links). Processing this scale requires distributed computing (e.g., MapReduce).

- Sparsity:The transition matrix representing the graph is extremely sparse.

- Sparsity means the number of actual links is tiny compared to the total possible number of links between all pages.

- This property is key for efficient storage and computation.

Structural Properties: Connectivity

- The Bow-Tie Structure (Connectivity):Research shows the Web is not uniformly connected but resembles a “bow tie.”

- SCC (Strongly Connected Component):A large core where every page is reachable from every other page.

- IN Component:Pages that link intothe SCC.

- OUT Component:Pages that are linked out ofthe SCC.

- Power-Law Distribution:The distribution of incoming links (in-degree) follows a power law.

- A very few pages (hubs like Wikipedia, major news sites) have a disproportionately large number of incoming links.

- Most pages have very few incoming links.

Directed vs. Undirected Graphs

- Directed Graph (The Reality):The Web is inherently directed.

- A link from Page A to Page B ($A \to B$) does not imply a reciprocal link ($B \to A$).

- Link analysis algorithms like PageRank rely entirely on this directionality to model the flow of authority.

- Undirected View (For Modeling):Occasionally, the graph can be treated as undirected to analyze relationships like co-citation or similarity, but never for authoritative ranking.

Components and Problems

- Strongly Connected Components (SCCs):Sets of pages where you can start at any page and reach any other page within the set by following links. Pages within an SCC share and circulate authority effectively.

- Dangling Nodes (Sink Nodes):These are pages that have incoming links but no outgoing links.

- Problem:In the random surfer model (used by PageRank), the surfer gets “stuck” here, leading to non-convergence or rank leakage.

- Solution:Algorithms handle these nodes by assuming a jump (teleportation) to a random page.

Anchor Text: Definition and Role

- Definition:Anchor text is the clickable, visible text within a hyperlink tag.

- Example: <a href=”www.target.com”>**This is the Anchor Text**</a>

- Role:Anchor text acts as a label or descriptionprovided by the linking pageto describe the content of the target page.

- Why it’s powerful:It is often a concise, human-generated summary of the target page’s content, which can be more accurate than the target page’s own title or metadata.

Anchor Text as a Relevance Signal

- Aggregation:Search engines aggregate all anchor text pointing to a single page.

- This aggregated text is treated as a highly trusted set of keywords associated with the target page.

- Relevance:It is a powerful signal for query relevance. If hundreds of external sites use the phrase “best travel guides” in their anchor text when linking to a specific blog, that blog is highly relevant for that query.

- Combining Signals:Anchor text is one of the most effective ways to combine the content of one page (the anchor) with the authority of the target page.

Retrieval and Ranking Examples

- Example 1: Wikipedia:Wikipedia pages are highly authoritative (high PageRank) and receive links with descriptive anchor text (e.g., “Battle of Hastings”). This combination makes them rank highly for relevant, specific queries.

- Example 2: Corporate Pages:A company’s homepage might not contain the phrase “Investor Relations,” but if all financial news sites link to it using that phrase as anchor text, the page will rank for that query.

- Ranking Mechanism:The anchor text terms are treated as if they were part of the target page’s document index, allowing content-based search engines to leverage link structure indirectly.

PageRank

PageRank: The Core Intuition

- Definition:PageRank (PR) is an algorithm that measures the intrinsic importance or authority of a web page based on its link structure.

- Intuition: “Votes” are Weighted:A page is important if it is linked to by many pages, and especially if it is linked to by many important pages.

- A link from CNN is worth far more than a link from a new personal blog.

- Recursive Definition:The rank of a page depends on the ranks of the pages linking to it. This recursive dependency forms the basis of the calculation.

- Core Principle:A page gains a high rank if it is linked to by many pages that alreadyhave high rank. Links act as endorsements, and the value of that endorsement is proportional to the source page’s own rank.

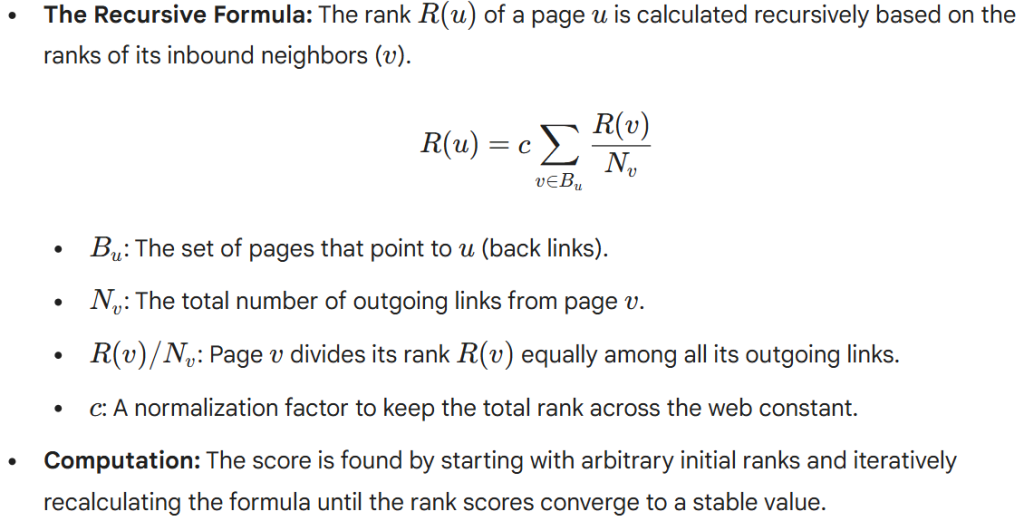

Simple PageRank

PageRank (Suffers “Rank Sink” Problem)

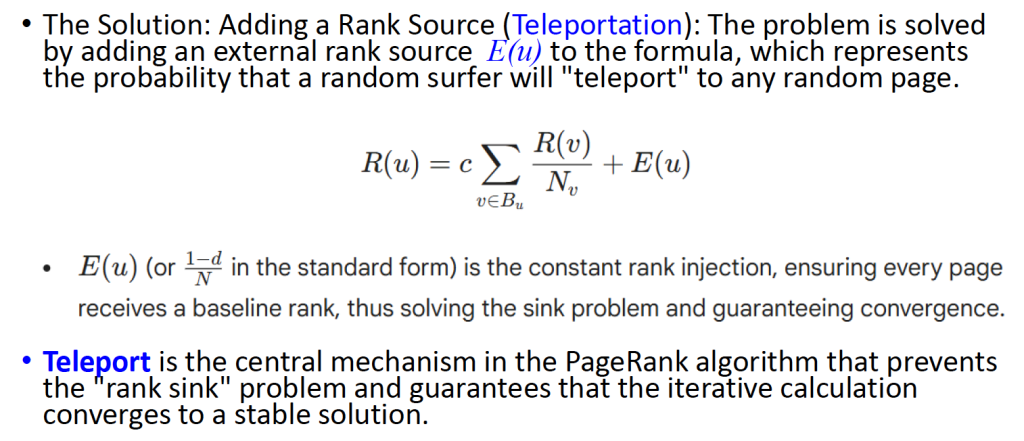

- The Flaw: Rank Sink Problem:The Simple PageRank algorithm suffers from a critical flaw when dealing with pages that have no outgoing links (Dangling Nodes).

- Mechanism:If a page only points to itself (or simply has no out-links), the rank it accumulates is trappedat that node and cannot be redistributed to the rest of the web.

- Consequence:This “rank sink” causes the total rank across the entire web graph to gradually drain away, leading to a system where the total rank is no longer conserved, and the ranks of other pages are unfairly reduced.

Teleportation

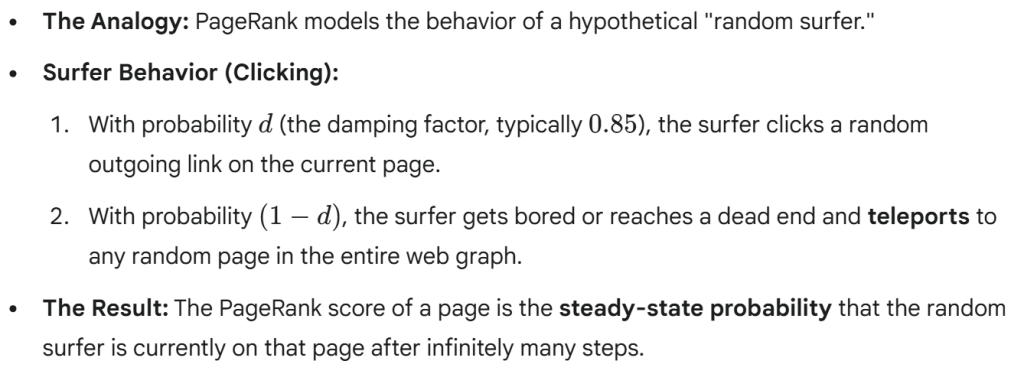

The Random Surfer Model

Importance of Link-Based Ranking

- Query-Independent Authority:PageRank calculates a score for every page beforea user enters a query. This score measures the page’s general, global authority.

- Separation of Concerns:It cleanly separates the concepts of:

- •Authority (Quality):Measured by PageRank (link structure).

- •Relevance (Topic):Measured by keyword matching (content).

- Breakthrough:This pre-computed authority score provides a robust mechanism to combat content spam, as spammers cannot easily manipulate external links.

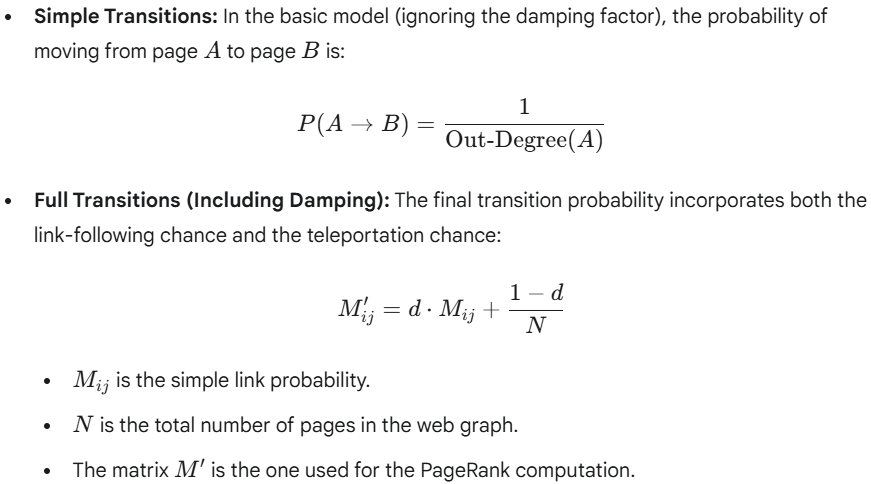

Markov Chains: The Basics

- Formal Model:The random surfer model is mathematically equivalent to a Markov Chain.

- States:The states in the chain are the individual web pages.

- Memoryless Property:The probability of moving to the next page depends onlyon the current page, not on the sequence of pages visited previously.

- Transition Matrix (M):This matrix represents all links in the web graph.

- An entryMijis the probability of moving from page jto page i.

Transition Probabilities

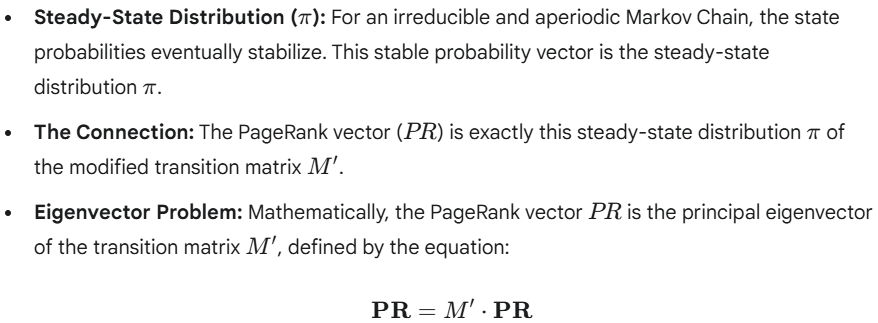

Steady-State and Connection to PageRank

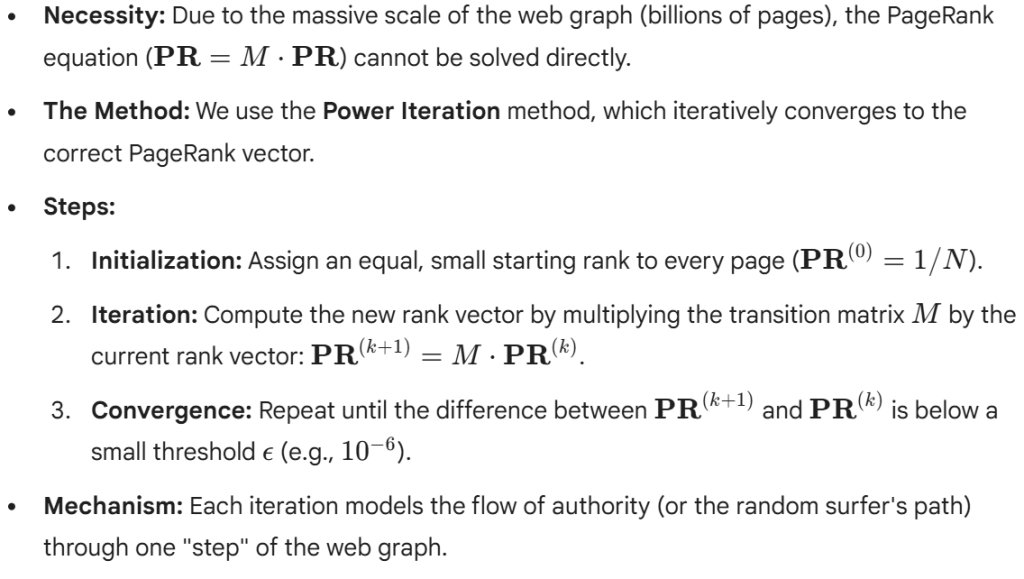

Iterative Computation (Power Iteration)

Handling Dangling Nodes

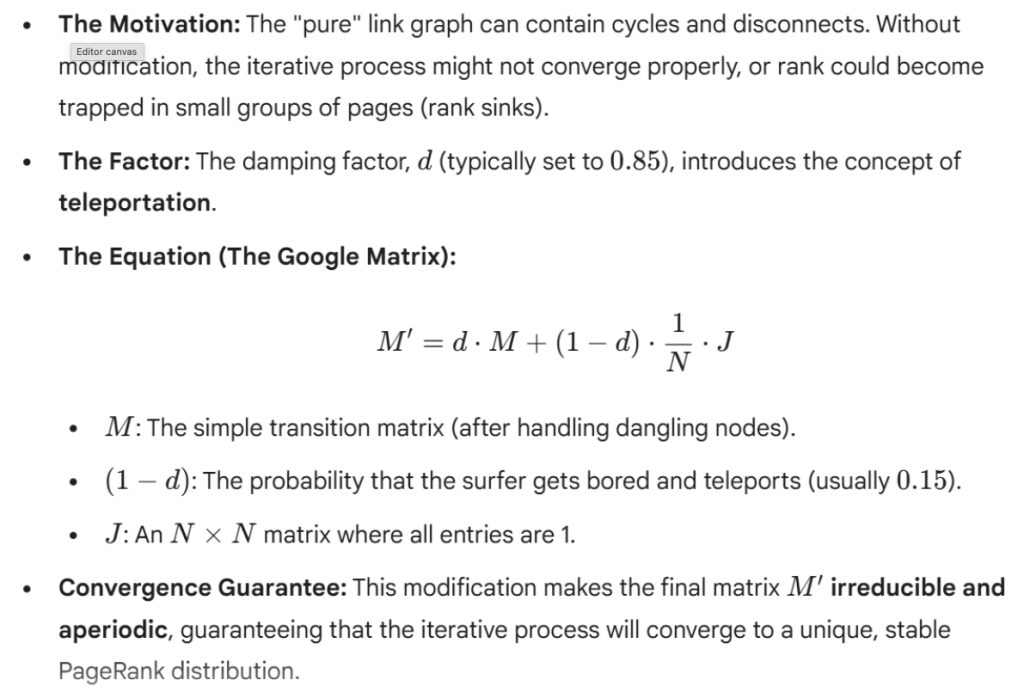

The Damping Factor (d)

Convergence and Stability

- Convergence Speed:The PageRank algorithm typically converges relatively quickly—usually within 50 to 100 iterations—to an acceptable tolerance level, even for the massive web graph.

- Stability:The dfactor ensures numerical stability. The PageRank scores change slowly over time because the underlying link structure of the web is relatively stable.

- Update Frequency:Because the computation is massive, PageRank is not calculated in real-time. It’s recomputed periodically (e.g., weekly or monthly) on the latest snapshot of the web graph, and these pre-computed scores are used in the live ranking system.

Scalability and Large-Scale Computation

- The Bottleneck:The primary challenge is not the complexity of the algorithm but the sheer sizeof the transition matrix (billions of edges).

- Distributed Computing:PageRank calculation is performed using distributed processing frameworkslike MapReduce(or similar modern big data platforms).

- MapReduceImplementation:

- Map Phase:Each page is processed by a mapper which “maps” its current PageRank score and distributes it to all pages it links to, along with the transition probability.

- Reduce Phase:The reducer gathers all incoming rank contributions for a specific page iand sums them up to calculate the new PR(i) for the next iteration.

- This approach effectively parallelizes the large matrix-vector multiplication, making the computation feasible across thousands of servers.

Motivation: Why Context Matters

- The Limitation of Global PageRank:Standard PageRank assigns a single “authority score” to every page. It assumes that if a page is important, it is universally important.

- The Context Problem:Authority is inherently topic-dependent.

- Example:A highly authoritative page about “Jaguar cars” might receive a high global PageRank, but it is irrelevant to a user researching “Jaguar” the animal.

- The Goal:To move from “Global Importance” to “Contextual Importance,”ensuring that ranking reflects authority within a specific domain of interest rather than just general popularity across the entire web.

Topic-Sensitive PageRank (TSPR)

- The Mechanism: Biased Teleportation:

- In standard PageRank, the random surfer teleports to anypage with equal probability (1/N) when they get bored.

- In TSPR, we restrict the teleportation set to a specific Topic(e.g., “Sports” or “Cooking”).

- The Seed Set (S):A set of pages pre-classified as highly relevant to a given topic (e.g., based on directory listings).

- The Calculation:When the random surfer “teleports,” they jump exclusively to one of these seed pages in set S. This biases the entire rank distribution towards pages that are topologically close to the topic seeds.

Personalized PageRank (PPR)

- User-Specific Bias:PPR extends the TSPR idea from a topic to an individual user’s interests.

- The Personalized Seed Set (P):The teleport set Pis determined by the user’s history, profile, bookmarks, or recent searches.

- The Mechanism:The random surfer is biased to jump back to pages the specific userhas previously deemed important or relevant.

- Result:This results in a unique PageRank vector calculated for each user or group, providing rankings tailored to their personal preferences and interests.

Applications in Search and Recommendation

- Focused Search:TSPR is used to pre-calculate multiple PageRank vectors (e.g., TSPR-Science, TSPR-Arts). When a user submits a query, the system classifies the intentand uses the corresponding TSPR vector to weight results, boosting relevant domain authorities.

- Recommendation Systems:PPR can be used to recommend new content. Pages that have high PPR scores relative to a user’s interest profile but low global PageRank are excellent candidates for discovery.

- Example:TSPR helps a search for “Mars” by prioritizing pages authoritative in “Astronomy” over those authoritative in “Candy Bars,” ensuring topical relevance is reinforced by link structure.

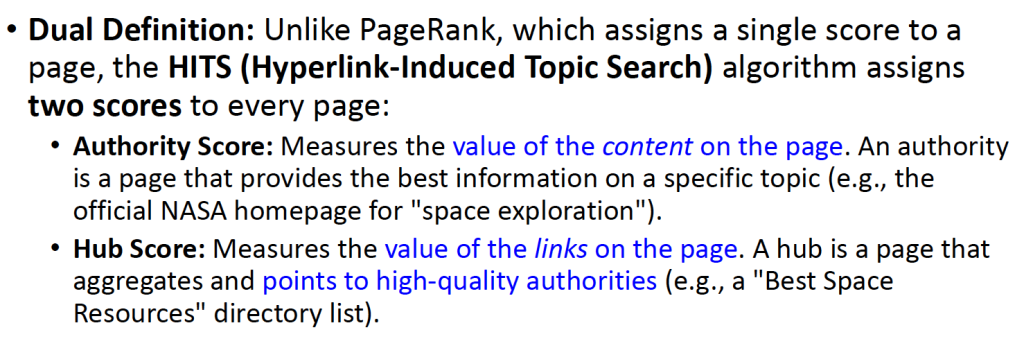

Hubs and Authorities (HITS Algorithm)

Concept of Hubs and Authorities

The Intuition: Mutually Recursive Relationship

- The Core Philosophy:The definitions of Hubs and Authorities are circular and mutually reinforcing:

- A Good Hubis a page that points to many Good Authorities.

- A Good Authorityis a page that is pointed to by many Good Hubs.

- Recursive Quality:You cannot calculate one without the other. The algorithm exploits this relationship to identify high-quality communities of pages relevant to a specific query.

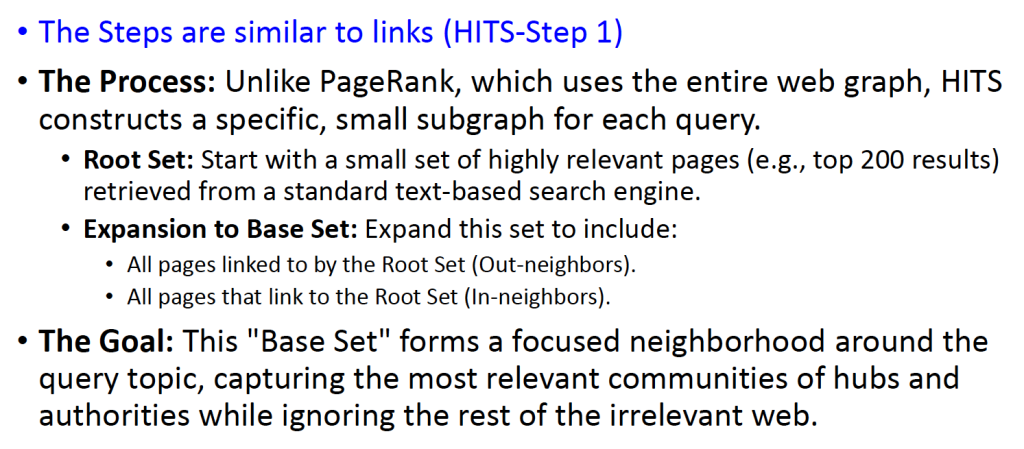

HITS Algorithm Step 1: Constructing the Graph

- Root Set:The algorithm starts by retrieving a small set of relevant pages (e.g., the top 200 results) from a standard text-based search engine for the user’s query.

- Expansion to Base Set:To capture the link structure, the Root Set is expanded to form the Base Set:

- Add any page that links toa page in the Root Set.

- Add any page that is linked to bya page in the Root Set.

- Goal:This creates a focused subgraph of the web that contains the most relevant communities for that specific query.

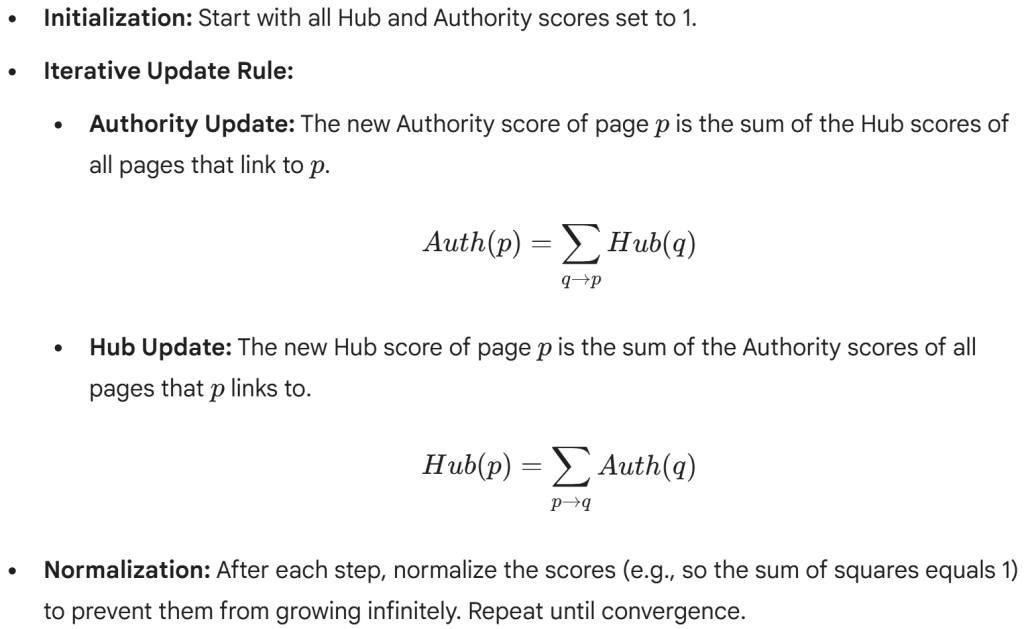

HITS Algorithm Step 2: Iterative Updates

Normalization step HITS algorithm (Part step 2 Previous slide): Controlling Score Growth

- The Problem:The iterative update process (where Hub scores sum Authorities, and Authority scores sum Hubs) causes the scores to increase rapidly with every step. If left unchecked, all scores would quickly approach infinity, losing their comparative meaning.

- The Solution:After every Authority Update step and every Hub Update step, the scores must be normalized. This ensures the resulting scores are scaled back into a comparable range, usually between 0 and 1.

Normalization step HITS algorithm: Controlling Score Growth

Hubs and Authorities (HITS Algorithm)—Choosing the Subset of the Web

Query-Dependent Subgraph Selection

Practical Considerations and Limitations

- Computational Cost:Because the graph is query-specific, the HITS algorithm must be run in real-timeafter the user submits a query. This makes it significantly slower and more computationally expensive than pre-computed algorithms like PageRank.

- Topic Drift:A major limitation is “Topic Drift.” If the Root Set contains a few pages from a very tightly-knit but irrelevant community (e.g., a general query about “Jaguars” drifting entirely into “Jaguar Cars” due to strong linking among car sites), the algorithm can converge on the wrong topic, ignoring the user’s broader intent.

Vulnerabilities: Link Spam and SEO

Advanced Link-Based Models

TrustRank: Combatting Web Spam

TrustRank: Combatting Web Spam

- The Challenge:“Link Farms” and spam pages artificially inflate PageRank, making standard link analysis unreliable for quality control.

- The Semi-Supervised Approach:TrustRankassumes that good pages rarely link to bad pages.

- Oracle Set:Start with a small, manually verified set of trustworthy “seed” pages (e.g., universities, government sites).

- Trust Propagation:Propagate trust scores outward from these seeds using a biased PageRank calculation.

- Trust Attenuation:Trust dampens as it moves further from the seed set. A page 1 click away from a seed is highly trusted; a page 5 clicks away is less so.

- Spam Mass:By comparing a page’s standard PageRank with its TrustRank, we can estimate its “Spam Mass”—the portion of its rank likely derived from manipulation.

TrustRank—The Algorithm

- The Algorithm:

- Seed Selection:Identify a small, high-quality set of trusted “Seed Pages” (e.g., .gov, .edusites) that are manually verified as non-spam.

- Biased PageRank:Run a modified PageRank calculation where the random surfer teleports onlyto these trusted seed pages (similar to Topic-Sensitive PageRank).

- Trust Attenuation:Trust scores decrease as you move further away from the seed set. Pages with high TrustRankscores are legitimate; those with low scores are potential spam.

- Application:Used primarily to filter out spam from search results and demote pages involved in manipulative linking schemes.

SALSA (Stochastic Approach for Link-Structure Analysis)

- The Problem with HITS:The original HITS algorithm is vulnerable to the “Tightly Knit Community” (TKC) effect, where a small group of mutually linking pages (even if irrelevant) can dominate the results.

- The SALSA Solution:SALSA modifies HITS by casting the problem as a Random Walkon a bipartite graph of hubs and authorities.

- Mechanism:Instead of summing all scores (which causes explosion), SALSA normalizes the link weights based on the number of links.

- It divides the authority weight by the number of in-links and hub weight by the number of out-links.

- Result:This stochastic approach is significantly more stable and less prone to manipulation or topic drift than the original HITS algorithm.

Integration with Content-Based Signals

- Authority vs. Relevance:Link analysis measures staticglobal authority, but it doesn’t inherently measure relevanceto a specific user query.

- The Hybrid Signal:Modern search engines do not rank by PageRank alone.

- Relevance Score:Computed using content models (TF-IDF, BM25, BERT) based on query term matching.

- Quality Score:Computed using link models (PageRank, TrustRank).

- Ranking Fusion:The final rank is a function (often determined by Machine Learning / Learning-to-Rank models) that combines these signals. A page needs bothhigh relevance and high authority to rank well.

Anchor Text Weighting and Hybrid Approaches

- Weighted Links:Not all links are created equal. Advanced models assign different weights to edges based on the Anchor Text.

- A link with anchor text “click here” carries less topical weight than a link with anchor text “neuroscience research paper.”

- Structural Weighting:Links are also weighted by their position:

- Editorial Links:Links inside the main body text are trusted more (higher weight).

- Navigational Links:Links in footers or sidebars are trusted less (lower weight).

- Hybrid Graph Models:Newer approaches construct heterogeneous graphs that include not just pages, but users, queries, and clicks as nodes, allowing link analysis to propagate through user behavior data as well as web structure.

Link Analysis Beyond Web Search

Citation Analysis in Academic Publishing

- The Origin of Link Analysis:Web link analysis was heavily inspired by bibliometrics—the statistical analysis of academic citations.

- Direct Parallels:

- Paper = Node:Each academic paper is a node in the citation graph.

- Citation = Link:A citation from Paper A to Paper B is a directed edge, signaling “credit” or “prior art.”

- Metrics:

- Impact Factor:Similar to in-degree centrality, measuring the average number of citations received by articles in a journal.

- h-index:A metric for author-level authority, balancing productivity (number of papers) and citation impact (number of links).

- Bibliographic Coupling & Co-citation:These concepts (papers citing the same work, or being cited together) are the direct ancestors of the HITS hub/authority model.

Social Network Analysis (SNA) Parallels

- Mapping the Social Graph:Link analysis algorithms are directly applicable to social networks (Twitter, Facebook, LinkedIn).

- Follows/Friending = Links:These edges define the structure of the community.

- Centrality Measures:

- Degree Centrality:Users with the most followers (Celebrities/Hubs).

- PageRank:Users followed by other influential users(Thought Leaders).

- BetweennessCentrality:Users who act as bridges or “gatekeepers” between different social groups or communities.

- Application:Identifying key influencers for marketing, detecting communities (clustering), and predicting information spread (virality).

Knowledge Graphs and Entity Linking

- From Documents to Things:Modern search analyzes a graph of entities(people, places, concepts), not just documents.

- Nodes:Real-world entities (e.g., “Albert Einstein”).

- Edges:Relationships (e.g., “born in,” “won prize”).

- Link Analysis for Disambiguation:

- Entity Linking:When a page mentions “Jaguar,” the system looks at the link structure of the surrounding text and entities to decide if it maps to the Animalnode or the Carnode in the Knowledge Graph.

- Relation Strength:Algorithms similar to PageRank are used to score the “confidence” of a fact or relationship within the graph, filtering out noise.

Modern Trends in Link Analysis

Graph Neural Networks (GNNs)

- The Modern Evolution:Traditional PageRank calculates a single scalar score. Graph Neural Networks (GNNs)learn a high-dimensional vector representation(embedding) for every node.

- Mechanism:

- GNNs (like GraphSAGEor GCN) aggregate information from a node’s neighbors.

- They learn to encode both the graph structure(who links to whom) and the node content(text on the page) simultaneously.

- Advantage:Unlike static PageRank, GNNs can generalize to new, unseen nodes (inductive learning) and capture complex, non-linear structural patterns that simple random walks miss.

Hybrid Approaches: Combining Signals

- The “Learning to Rank” Framework:Modern search engines do not rely on a single algorithm. They use Machine Learning models (e.g., Gradient Boosted Decision Trees) that take hundreds of features as input.

- The Input Features:

- Link Signals:PageRank, HITS Authority, Domain Trust.

- Content Signals:Keyword matching, semantic similarity (BERT).

- User Behavior: Click-through rates (CTR), dwell time, pogo-sticking.

- The Hybrid Model:The ML model learns the optimal weightfor each signal. For example, it might learn that Link Authority matters more for medical queries (“symptoms of flu”) but User Behavior matters more for trending news.

Ethical Considerations in Ranking

- The “Rich Get Richer” Effect:Link analysis creates a feedback loop. Top-ranked pages get more traffic, which leads to more people citing/linking to them, which further cements their top ranking.

- Consequence:It becomes very difficult for new, high-quality content to break into the top results.

- Bias Amplification:If the link structure reflects societal biases (e.g., linking predominantly to male authors in science), the algorithm will amplify this bias, presenting it as objective “authority.”

- Echo Chambers:Personalization algorithms (based on Personal PageRank) can trap users in filter bubbles, showing them only content that reinforces their existing worldview.

Evaluation and Critiques

Strengths and Limitations of Link Analysis

- Strengths:

- Hard to Fake:Unlike text (which anyone can edit), you cannot easily force authoritative sites to link to you.

- Global Wisdom:It crowdsources quality judgments from millions of webmasters.

- Pre-computable:Scores can be calculated offline (batch processing), enabling fast query-time retrieval.

- Limitations:

- Lag:It takes time for new links to be crawled and indexed.

- Sparsity:Many high-quality pages (especially new ones) have few or no links (“The Cold Start Problem”).

- Topic Drift:Pure link analysis can sometimes drift away from the user’s

Vulnerabilities to Manipulation

- Link Spam:The commercial value of high rankings led to a “Black Hat SEO” industry focused on gaming link algorithms.

- Common Attacks:

- Link Farms:Networks of sites created solely to link to each other.

- Paid Links:Buying links from high-authority sites without editorial oversight.

- Comment Spam:Bots posting links in blog comments or forums.

- The Countermeasures:Search engines developed sophisticated countermeasures (like Google’s Penguin update and TrustRank) to detect unnatural linking patterns and penalize the offenders.

Balancing Link Analysis with Modern ML

- The Shift:While links remain a strong signal for authority, modern ML (specifically Large Language Models and Transformers) has become superior for understanding relevance and intent.

- Current State:

- Content First:Deep learning models (like BERT) ensure the document actually answers the user’s question.

- Links as Validator:Link analysis acts as a “trust filter” or tie-breaker. If two pages have equally good answers, the one with better link authority wins.

- Diminishing Returns:As content understanding improves, the reliance on raw link counts is slowly decreasing, though it remains a fundamental pillar of web search.

Watch, Read, Listen

-

—

by

Join 900+ subscribers

Stay in the loop with everything you need to know.